Maksym Del

I am a postdoc at the Estonian Center of AI Excellence and the Natural Language Processing group at the University of Tartu. Currently, I am working on improving the practical reliability of frontier LLMs (uncertainty, CoT monitoring) and evaluating their capabilities. During my PhD, I worked on mechanistic understanding of cross-lingual generalization and LLM evals. My research interests include ensuring AI trustworthiness and safety more broadly by developing model control, monitoring, and interpretability measures.

Google Scholar / LinkedIn / GitHub / Twitter (X) / Email


Research

My research interests include ensuring AI trustworthiness and safety more broadly by developing model control, monitoring, robustness, and interpretability measures.


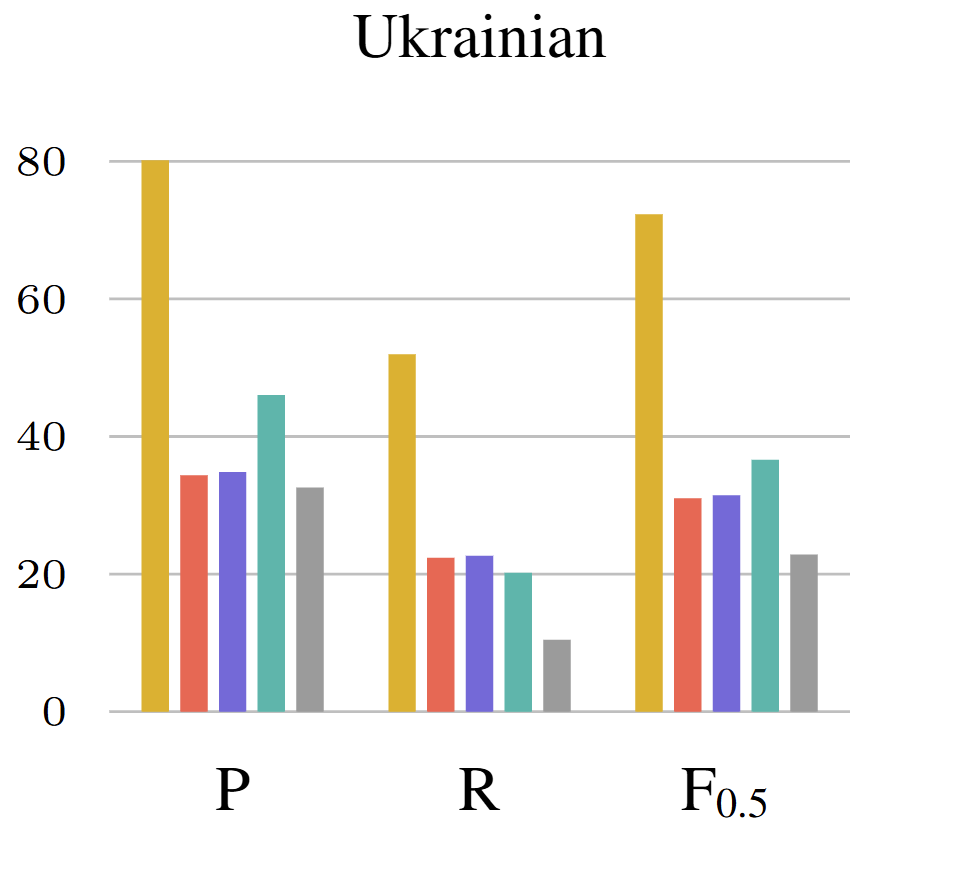

To Err Is Human, but Llamas Can Learn It Too
Agnes Luhtaru*, Taido Purason*, Martin Vainikko, Maksym Del, Mark Fishel
EMNLP Findings, 2024
arxiv
We show how to effectively use large language models for artificial error generation and grammatical error correction.


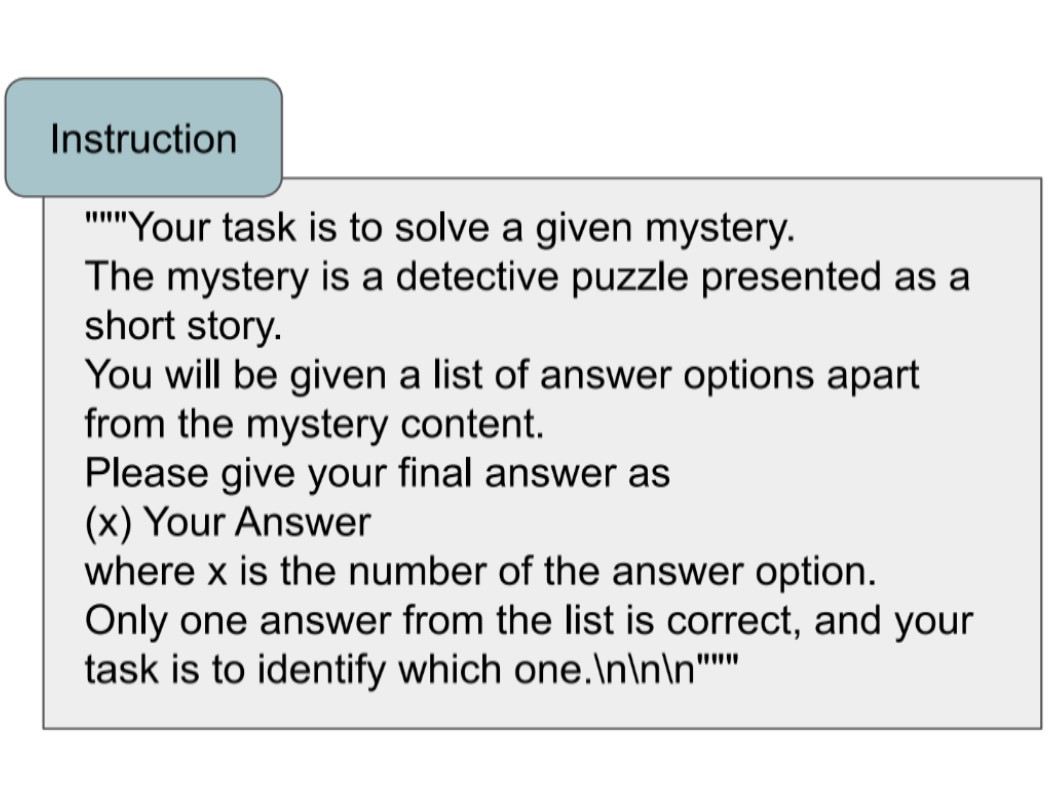

True Detective: A Deep Abductive Reasoning Benchmark Undoable for GPT-3 and Challenging for GPT-4
Maksym Del, Mark Fishel
*SEM, 2023
paper / code
We show that GPT-4 cannot reliably solve short-form detective puzzles, even when given a chain of thought reasoning trace hinting at the correct answer.


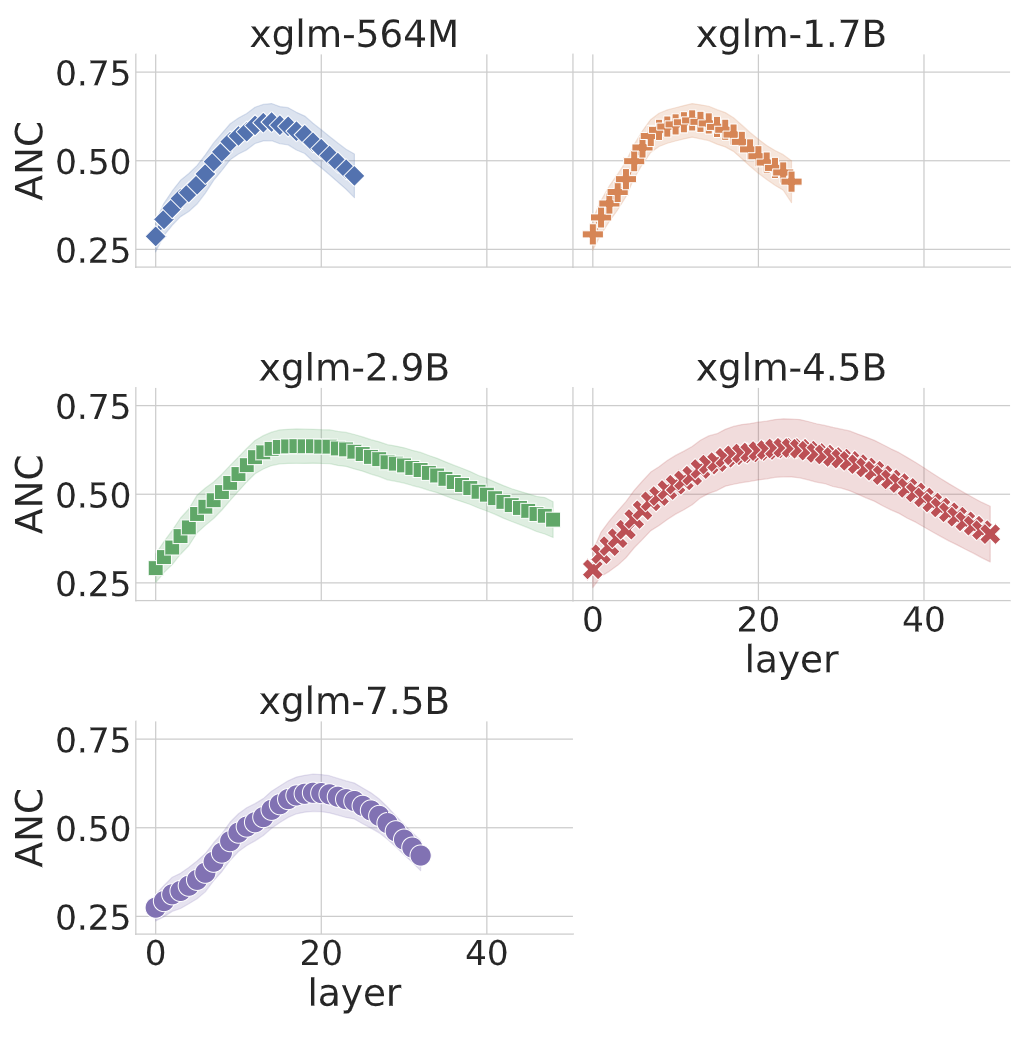

Cross-lingual Similarity of Multilingual Representations Revisited
Maksym Del, Mark Fishel
AACL, 2022
paper / code
We demonstrate the universality of the internal cross-lingual structure across multilingual language models.


Similarity of Sentence Representations in Multilingual LMs: Resolving Conflicting Literature and a Case Study of Baltic Languages
Maksym Del, Mark Fishel
BJMC, 2022
paper / code
We address confusion in prior work regarding the internal cross-lingual structure in multilingual language models.


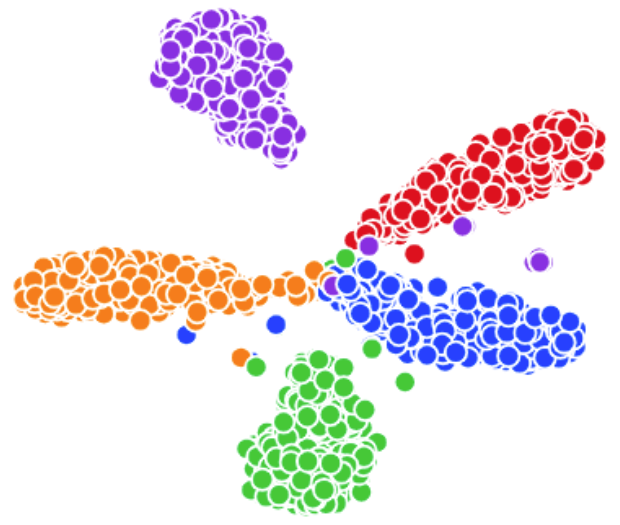

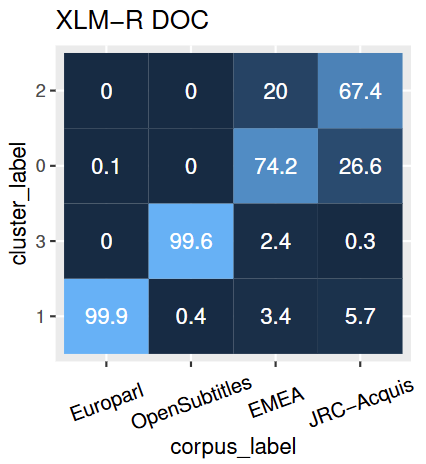

Translation Transformers Rediscover Inherent Data Domains
Maksym Del*, Elizaveta Korotkova*, Mark Fishel
WMT, 2021
paper / code
We show that translation transformers keep internal domain representations apart.


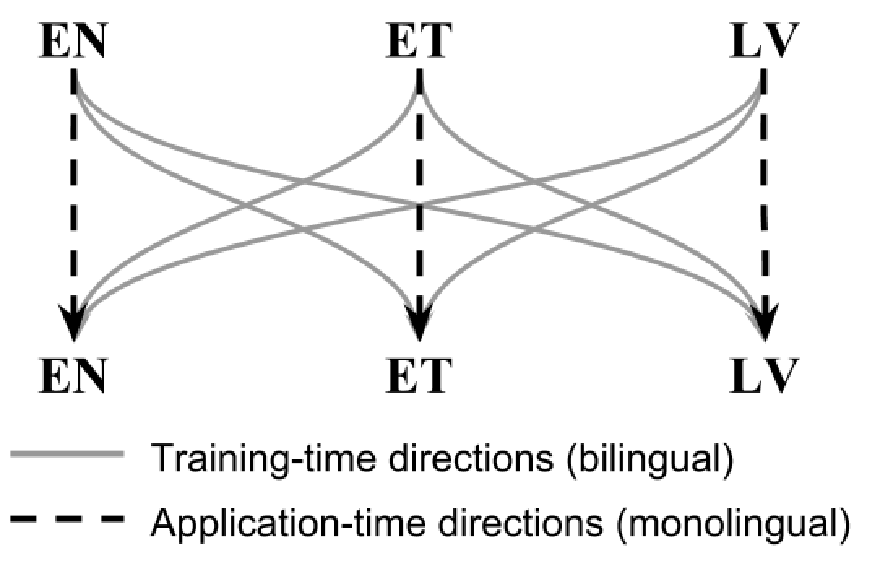

Grammatical Error Correction and Style Transfer via Zero-shot Monolingual Translation
Elizaveta Korotkova, Agnes Luhtaru, Maksym Del, Krista Liin, Daiga Deksne, Mark Fishel
preprint, 2019
arxiv
We present an approach that performs both grammatical error correction and style transfer with a single multilingual translation model out of the box.


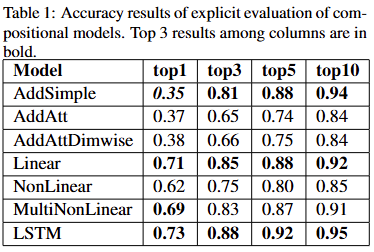

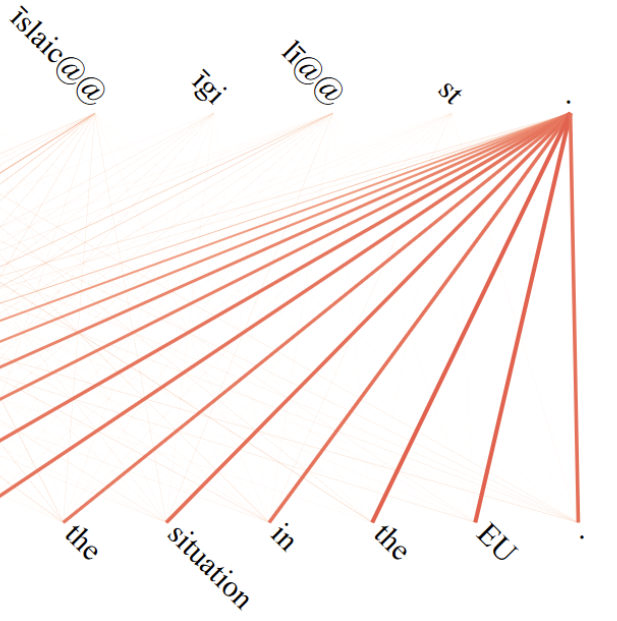

Phrase-based Unsupervised Machine Translation with Compositional Phrase Embeddings
Maksym Del, Andre Tattar, Mark Fishel
WMT, 2018
paper
We propose compositional phrase embeddings for unsupervised machine translation.


C-3MA: Tartu-Riga-Zurich Translation Systems for WMT17
Matīss Rikters, Chantal Amrhein, Maksym Del, Mark Fishel
WMT, 2017
paper / code
We describe the neural machine translation systems of the University of Latvia, University of Zurich and University of Tartu submitted as a part of the WMT17 shared task.


Design and source code from Jon Barron's website